26 Mar 2022

The web has evolved into the most powerful and ubiquitous app deployment platform. We're used to rich in-browser apps that connect to external services and support collaborative real-time experiences. Developer tooling is no different, with on-line development environments (IDEs) and management consoles for front and back ends.

But the servers are still distinct entities and not part of the apps themselves. That all changed when StackBlitz recently introduced WebContainers to the developer community. The rules just changed.

- [Updated 2022-03-28: added developing on a Chromebook and quick preview]

- [Updated 2022-03-27: added a note about secrets and expanded summary]

The web has rapidly grown into a powerful device-independent platform for apps as well as content. New capabilities appear in browsers in a steady trickle but every now and then a significant jolt occurs. For example, standards like CSS Grid, Web Audio, Web Authentication, Progressive Web Apps (PWAs) and WebAssembly (WASM) have had a big impact on what developers can do with the web to provide rich functionality and excellent user experiences.

Google Maps was an early example of how the web supports rich and powerful user experiences. Now, users expect to work on their data in apps when they are online or offline and synchronised across multiple devices with different capabilities.

As many front ends have become more JavaScript centric, several hosted IDEs appeared that provide rich development environments for front end development. These show an editor side-by-side with a view of the web content.

A shout out here to Microsoft Visual Studio Code for providing a solid web technology IDE based on their Monaco editor, which is being used in developer focussed web apps. Plus a shout out to Google's V8 JavaScript engine, which powered the initial desktop version of VS Code via Electron.

These online IDEs, and other web development services including no/low code, still use servers, though they are largely abstracted away from the developers. And of course developers often manage their own servers for ownership or more complex deployments. These servers support the deployment build chain and runtime for both the web pages and the API endpoints that rich web apps require. Servers also typically run developer tooling and code platforms as an alternative to using a local workstation.

But what if rather than being separate the backend and web servers could also be deployed in-browser alongside the frontend code? What would that even mean? What new capabilities would be enabled?

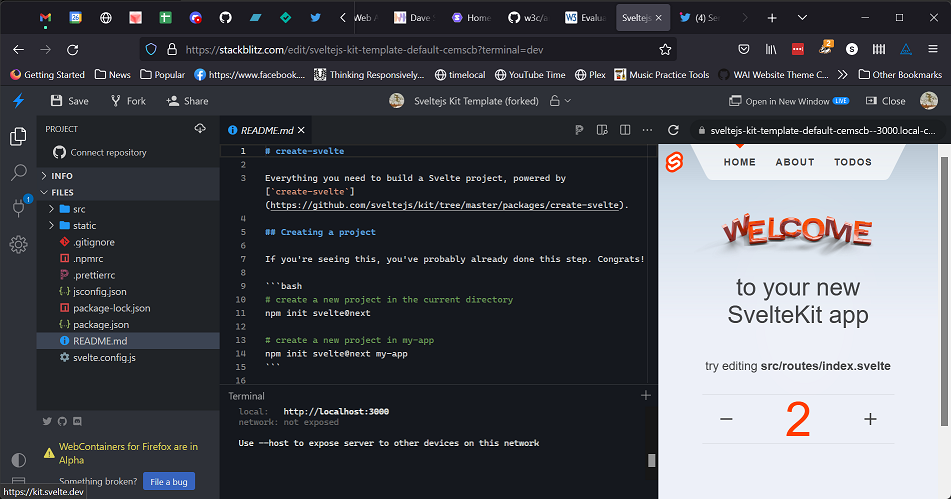

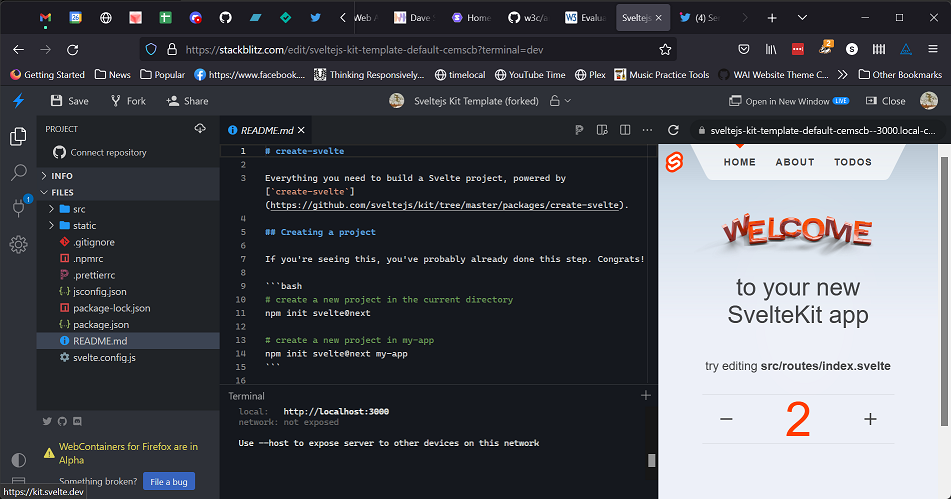

Well you can now try this for yourself, thanks to the groundbreaking StackBlitz service built on their WebContainer technology. Here’s an example that runs a full stack SvelteKit app. It boots up in seconds. I think you will be impressed.

WebContainers

Containers hit the developer world a while back and had a massive impact on system development practices and backend architectures. Often likened to lightweight virtual machines, they provide a standard way to package and deploy systems code. They are isolated yet share the services of the underlying operating system (Linux or Windows).

What StackBlitz have created, and are building their business on, are containers that run in a web browser. Yes, that’s right, this impressive feat is now feasible with today’s fantastic modern browsers. And what’s more, they run really fast too.

WebContainers provide a complete Linux-style operating system (OS), including the kernel and a minimal bash command line environment. This is accessed via a terminal window in the StackBlitz web app.

In order to provide developer tooling and a web server, you also get nodejs and a custom npm clone designed for speed and security. I hear that more POSIX conformance is being considered too.

In addition, vitejs is included. While ESM modules could be used explicitly with modern browsers, vite adds useful module import features such as node_modules module resolution and import of css files.

This is “no mean feat” and has to be experienced to be believed. I don’t know how much has been implemented from scratch or is a port like the embedded ARM ports of Linux. But the browser context is distinct from even embedded platforms.

The key enabling technology here is WebAssembly (WASM) which enables code to be cross compiled to run in a JavaScript engine. Plus, Service Workers, which provide the required “process” for the container to run in, separate from the frontend HTML browser context. The container exposes the usual socket/port for the browser front end to connect to.

To provide a complete fullstack integrated development environment (IDE), StackBlitz adds a VS Code editor and file system browser. They also provide GitHub integration for version control. Soon, I understand GitHub will be available as the file system as well, providing code security and common developer workflows. The frontend preview can be opened in its own tab/window as well.

The final touch is a set of predefined containers covering a range of back and front end technologies. These are opened with a click, landing you in the IDE ready to go once the WebContainer boots and serves the web pages.

The power of a URL for developers

What this means is that given a URL you get a fully functional nodejs server as well as the frontend app, which is also served from the container. This is all running in the browser window / tab. You can then use the powerful browser F12 developer tools to debug BOTH front and backend.

The reason I say this is “web native” development is that apart from running the backend in the browser, you get to leverage the core super power of the web. The URL!

URLs underpin the RESTful web architecture. They identify a resource and you can share them, embed them in content, use them in apps, even put them in a QR code. With StackBlitz that means there are now many ways to access a full stack app running in a web browser.

As developers often want to leverage existing code and modify it, StackBlitz provides a simple way to use the common “fork” pattern. This gives you a complete new copy of the full-stack, ready to work on. With the coming improved GitHub integration that’s going to neatly fit existing developer workflows too. This could open up new ways to make and accept community contributions via Pull Requests.

As browsers support multiple tabs you can have multiple web apps running at once, for example to compare a fork or Pull Request. They are completely isolated, neatly avoiding the common problem experienced on developer machines of conflicting versions of globally installed tools or dependencies.

Under the bonnet (hood), I expect a URL simply refers to a text based container configuration resource. The StackBlitz code turns that into the fullstack running app representation. This means not only low server costs for StackBlitz but also that, as static assets, they can be pushed to the edge, providing very low latency for an even faster developer experience.

Other benefits

This is much more than ultra cool technology. And it is definitely that! It could have a huge impact on how developers deploy code and work together.

There are some good security gains too. For example, there’s less over-the-wire traffic. The code also runs in the deliberately secure browser sandbox. There’s also no public server attack surface to secure. Currently, WebContainers expose the one port with access limited to the same browser.

Reliability is boosted by being able to restart the container and rebuild the stack with a simple browser refresh. The lightning fast speed makes this usable.

I’m convinced part of the reason the web exploded was the ability to “View Source” enabling developers to see how code worked. StackBlitz expands this powerful visibility to the server code as well. Of course the caveat is that much terrible code can easily get propagated.

You need to be very careful with your secrets like API tokens. Unless you keep them out of the server source you are going to be giving away access if you ever share (or have shared) your StackBlitz URL, as is most likely. Keeping them in a local file will work for a single developer but a more secure solution will be needed for teams.
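One common way to keep a token out of shared source is to read it from the environment at startup. The following is a minimal sketch of that idea and is not specific to StackBlitz; the helper `getSecret` and the variable name `API_TOKEN` are hypothetical, and how environment values are supplied to a WebContainer is left as an assumption.

```javascript
// Hypothetical helper: read a secret from the environment instead of
// hard-coding it in source that may be shared via a URL or a fork.
function getSecret(name, env = process.env) {
  const value = env[name];
  if (!value) {
    // Fail fast so a missing secret is noticed at startup, not at first use.
    throw new Error(`Missing required secret: ${name}`);
  }
  return value;
}

// Example with an explicit env object (API_TOKEN is an assumed name).
const token = getSecret('API_TOKEN', { API_TOKEN: 'example-value' });
console.log(token.length > 0);
```

Passing the environment in as a parameter keeps the helper easy to exercise without touching real credentials.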

For educational uses, embedding a URL in a course, blog or even a presentation provides instant access to running code! What could be better?

The compute model is basically Bring Your Own Device (BYOD). There’s no need to pay high PAYG server runtime costs.

When the improved GitHub integration lands, StackBlitz will provide a quick way to preview any branch or PR, without needing a Continuous Deployment service (though they do have many benefits).

One last benefit is you can develop fullstack on a low cost Chromebook (or a PC refreshed with ChromeOS). I can never get the Linux integration working on ChromeOS, but that’s not an issue now we have StackBlitz.

Limitations

The editor is basic and is missing language services and other useful plugins that are part of the nice experience in VS Code. Perhaps that will change soon. At least Prettier is included.

For now at least, the backend is limited to a nodejs server with a simple architecture. That may not be a restriction for many but there are other popular web frameworks. For example Ruby is still very popular, as is PHP.

When you wish to deploy elsewhere there is no easy one-click option, though it’s not that hard to do. Static websites, for example SSG generated, are easy enough to deploy: a push of the source to git can trigger a CI/CD service like Vercel or Netlify. The backend code can also be deployed to PaaS or other environments supporting server configuration.

However, I expect serverless will prove to be the best fit as functions can run in the WebContainer as well as be deployed to a service. Refactoring stateless server code into multiple separate serverless functions is easy enough to do. Deployment could then be automated, perhaps using something like the Serverless project to provide vendor neutrality of Function bindings.
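A stateless function of this kind might look like the sketch below. It follows the AWS Lambda style handler shape that Netlify Functions use (an async function that receives an event and returns `statusCode` and `body`). The `greet` logic and the `name` query parameter are made up for illustration.

```javascript
// Pure, stateless logic: everything it needs arrives in its arguments.
function greet(name) {
  return { message: `Hello, ${name || 'world'}!` };
}

// Lambda-style handler wrapping that logic. In a function file it would be
// exported (for example as `exports.handler`); here it is a plain constant.
const handler = async (event) => {
  const params = (event && event.queryStringParameters) || {};
  return {
    statusCode: 200,
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(greet(params.name)),
  };
};
```

Because `greet` holds no state between calls, the same code can be invoked from a local server route or from a deployed function without changes.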

Blue sky

It’s going to be exciting to see what new developer ideas StackBlitz WebContainers seed.

For example, how about multiple WebContainers each running locally in its own browser tab providing a microservice like architecture? In browser Kubernetes orchestration anyone?

Summary

The key benefits I see of StackBlitz are in how a fullstack web app can now have painfree deployment with easy sharing and forking. Plus, the unified debugging of front and backend using the browser dev tools. You can think of it as a fullstack REPL, if you like. Obvious applications include prototyping, build previews from GitHub, running examples for bug reports, demonstrations, documentation and education. It’s not a big leap to imagine StackBlitz providing the primary deployment mechanism for some fullstack web apps.

In case you can’t tell I’m really excited by StackBlitz WebContainers. I love the web with a geeky passion. I’m fascinated by developer tooling, workflows and system architectures (which often mirror company structures). In my career I’ve worked on embedded RTOSs, digital communications, systems code and full stack web.

WebContainers bring everything together in one powerful concept. I expect great things. What can you come up with?

16 Jan 2022

While Jekyll and liquid provide limited support for collections, it's surprisingly easy to merge a set of JSON files so they can be readily and efficiently accessed on the client.

Requirements

We have three similar projects under development at the W3C WAI. Each provides information on a set of items (courses, authoring tools and evaluation tools) and users of our Jekyll SSG generated web ‘app’ will be able to sort and filter the items.

A form is provided to submit new items that will undergo a review process before eventually being added to the dataset for future access in the app.

So that’s only two pages, assuming no pagination.

Architecture

The need for client side manipulation of the item list view (filter and sort) suggests the JSON data be available to the client.

It would be possible to keep all state in the DOM and manipulate that directly, though that might be slow. It would also be possible to use the now common pattern of always rendering a view of the data in the client (as made popular by React).

However, as this is a very low complexity app other architectures might also be suitable without a risk of bugs due to complex interactions. For example, using matching data and HTML in the client.

Also, as the content will only rarely change and Jekyll is a SSG it makes sense to pre-generate as much as possible, both data and HTML. Then custom client side behaviour (ie JavaScript code) can be minimised.

A final observation is that as we are using a SSG, data and HTML can be pre rendered and directly included in pages, rather than being fetched and rendered with JavaScript at runtime in the client.

The need for scalability and pagination might impact these decisions, but initially data sets are small.

This post highlights an approach to the JSON data SSG rendering that might be non obvious. Options for client side access to that data are the topic for another post.

Outline

The SSG build time approach is as follows:

- each item has its own JSON data file in a folder with the others

- the JSON files have a top level object (hash) so we can access various fields

- generate a single JSON structure of the items as an array

- Jekyll is used to generate a single JSON structure of items

- HTML for the items can be created in a similar way

- we can use Jekyll includes to modularise the code

The data and initial HTML are directly embedded into the HTML files for easy client access.

So at build time we would like to:

- access the JSON files as a collection

- sort the collection on a specific item field - eg name

- generate a single JSON structure from the files

- iterate over the collection, to generate HTML for example

Creating the JSON structure

The Jekyll documentation on using data files is a little lacking and some experimentation is needed. But it turns out to be really easy to generate the JSON from all the files.

Liquid has the concept of arrays, but provides a very restricted set of filters (functions) that create and operate on them. It also has the [n] access operator, plus first and last methods. Jekyll adds a few more useful filters like push.

Jekyll also adds hashes (objects) and some filters that work on them. Hashes are created for you in standard variables and there is no way to create or manipulate them. The . and [] operators are provided for getting values.

But we can do what we need to with these limited programming language features.

Attempt

Given our JSON files live in the _data directory, an initial attempt might be as trivial as using the variable site.data to access them as a collection. Then we can try things like

{{ site.data | sort: 'name' }}

or

{{ site.data.items | jsonify }}

and

{% for item in site.data %} ... {{ item.name }} ... {% endfor %}


But alas, results are not as expected. So with a little use of the inspect filter it turns out that:

- site.data is a hash keyed by filename, with values that are the file contents parsed as JSON (based on the file extension being .json)

- the jsonify filter shows an object (hash) with the same structure

- when iterating using for, each file item is converted into a 2-tuple of [key, value]

- sorting appears to work using the filename key
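For readers more at home in JavaScript, these findings are roughly analogous to working with an object keyed by filename. The sketch below is an analogy only (it says nothing about how Jekyll is implemented), and the file names and fields are invented.

```javascript
// Stand-in for site.data: one key per JSON file, value is the parsed content.
const siteData = {
  'tool-b': { name: 'Beta', type: 'evaluation' },
  'tool-a': { name: 'Alpha', type: 'authoring' },
};

// Iterating a hash yields [key, value] pairs, much like the Liquid for loop.
const pairs = Object.entries(siteData);

// Dropping the keys gives a plain array that can be sorted on a field.
const values = Object.values(siteData)
  .sort((a, b) => a.name.localeCompare(b.name));

console.log(pairs[0][0]);               // the filename key of the first pair
console.log(values.map((v) => v.name)); // names in sorted order
```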

Solution

The solution is straightforward. As we don’t care about the filenames we can convert the hash to an array of the values. Then all the operations work as required. Here’s the code:


# create an empty array and add the hash item values

# note push is non mutating

{% assign values = "" | split: "," %}

{% for item in site.data -%}

{% assign value = item[1] %}

{% assign values = values | push: value %}

{% endfor %}

Note there’s no “return” from includes so the output is any variables we set. In this case it’s values.

Now you can perform those operations we tried before:

{{ values | sort: "name" }}

{{ values | jsonify }}

{% for value in values %} ... {{ value.name }} ... {% endfor %}

As an optimisation you can put the code in an include, passing the data folder (you can use subfolders of _data to organise things) and a sort key.

{% include sort-data-folder.liquid data=site.data sortKey="name" %}

06 Jan 2022

The Svelte framework has several reactivity features: Assignments, Statements, Stores and Component Events. This post summarises the options and explores when you might use each one.

Reactivity

Reactivity is a coding “style” where when some data changes, other dependent data also gets updated, automatically. Perhaps the most common occurrence of this is in spreadsheets where changing a cell value causes all referencing cells to also update. This is often seen as a “push” semantic, compared to a “pull” semantic of calling a function.

Reactivity is often implemented using a version of the “publish / subscribe” (pubsub) pattern, where any number of subscribers can be updated with changes broadcast from a publisher.
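As a rough illustration of the pubsub pattern, here is a minimal sketch in plain JavaScript. The name `createPublisher` and the example values are hypothetical and are not taken from any particular library.

```javascript
// Minimal publish/subscribe sketch. `createPublisher` is a hypothetical name.
function createPublisher() {
  const subscribers = new Set();
  return {
    // Register a callback; returns a function that removes it again.
    subscribe(callback) {
      subscribers.add(callback);
      return () => subscribers.delete(callback);
    },
    // Push a value to every current subscriber.
    publish(value) {
      subscribers.forEach((callback) => callback(value));
    },
  };
}

const prices = createPublisher();
const seen = [];
const unsubscribe = prices.subscribe((p) => seen.push(p));
prices.publish(10);
unsubscribe();
prices.publish(20); // no longer delivered to the removed subscriber
console.log(seen);
```

The publisher never needs to know who is listening, which is where the decoupling comes from.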

Event systems such as DOM events provide one form of highly decoupled reactivity where an event (or message) is dispatched from one element and other elements may handle it. In the DOM the handler is a callback function.

Reactivity is not usually built into programming languages. JavaScript requires a library or framework feature. However, there is a Stage One TC39 Proposal to add an Observable type to JavaScript. This is based on the RxJS Reactive Extensions Library for JavaScript, which enables a style of programming called Functional Reactive Programming (FRP) or “streams”. Another popular reactive library for JavaScript is MobX state manager.

FRP is often referred to by the shorter Reactive Programming (RP) which is actually technically a bit different. FRP, like other functional programming styles, involves data flowing through small functions such as map and reduce. Code thus consists of declarative chains of expressions, compared to imperative lists of statements or object oriented methods operating on private data.
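The contrast between the two styles can be sketched with an ordinary array. This only shows the functional chaining idea, not a stream library, and the sample numbers are arbitrary.

```javascript
const readings = [3, 8, 15, 4];

// Imperative: a list of statements mutating a running total.
let total = 0;
for (const r of readings) {
  if (r > 3) {
    total += r * 2;
  }
}

// Declarative: the same computation as a chain of small functions.
const chained = readings
  .filter((r) => r > 3)
  .map((r) => r * 2)
  .reduce((sum, r) => sum + r, 0);

console.log(total === chained);
```

In a reactive library the same chain would be applied to values arriving over time rather than to a fixed array.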

Svelte Reactivity

One of the highly satisfying features of the Svelte web app development framework is that reactivity is baked in (unlike React). Any assignment to a local variable is reactive and will cause the component to be re-rendered when the value changes.

<script>

let count = 0;

function handleClick () {

count = count + 1;

}

</script>

<button on:click={handleClick}>

Clicked {count} {count === 1 ? 'time' : 'times'}

</button>

There are also three more explicit reactive features and while the excellent Svelte documentation describes each, it may not be obvious which one to use. None of these features go quite as far as FRP. But, they are nevertheless extremely powerful and easy to use when creating interactive web apps with Svelte.

Note that as with other JavaScript reactive libraries the $ symbol is conventionally used to identify reactive elements.

Reactive Statements

Statements can be marked as being reactive. These can be single line or block statements. The statement will be re-evaluated whenever any variables or properties directly referenced in it are changed. As with simple assignments, changes will cause the enclosing component to be re-rendered.

<script>

let count = 0;

$: doubled = count * 2;

function handleClick() {

count += 1;

}

</script>

<button on:click={handleClick}>

Clicked {count} {count === 1 ? 'time' : 'times'}

</button>

<p>{count} doubled is {doubled}</p>

This form of reactivity is marked with the rarely used JavaScript label syntax using a label name of $, ie lines of code that start with $:. To reference the value in a component the variable name is used directly, without any $.

Updates are synchronous such that code changing the source variable will return after all the referencing reactive statements are also updated.

Reactive assignments and their references are useful within code in a single file such as a Svelte component. For example, an event handler declared in the HTML section may update a variable. That variable can be referenced in a reactive statement in the script section to derive a new value. Then, the new value can be referenced in the HTML as with a simple variable assignment. Whenever the event occurs the HTML content will be updated to match the new computed value.

See the tutorial and docs for details.

Reactive Stores

Svelte Stores are reactive and use the Observable pattern. They are similar to Observable types (see above). In Svelte the store is an observable which broadcasts changes to any code that is subscribed to it.

Stores are created using library functions and there are three types: readable, writable and derived. Readables are sources of data streams with sequential values being created in a callback. Writables may also be updated by external code using the set and update methods. Derived stores provide composition by computing and emitting values based on those from other stores when those are updated. Stores may be subscribed to by calling the subscribe method, or by using the syntactic sugar the Svelte compiler provides: prefixing the store name with a $.

<script>

import { readable } from 'svelte/store';

export const time = readable(new Date(), function start(set) {

const interval = setInterval(() => {

set(new Date());

}, 1000);

return function stop() {

clearInterval(interval);

};

});

const formatter = new Intl.DateTimeFormat('en', {

hour12: true,

hour: 'numeric',

minute: '2-digit',

second: '2-digit'

});

</script>

<h1>The time is {formatter.format($time)}</h1>

Updates can be synchronous or asynchronous depending on how the updating function is coded.

Stores are useful if you need asynchronous reactivity or want to share updates between code in many places, including components not in a parent-child relationship. For example, a component event handler can update a writable store and all subscribed components will be updated. They can also be used outside components. While Svelte doesn’t conceptually deal with FRP or streams it is easy to integrate other observable libraries like RxJS as the APIs are standardised.
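To make the store idea concrete outside a component, here is a hand-rolled sketch of a writable store in plain JavaScript. It loosely follows the store contract (subscribe calls back immediately with the current value and returns an unsubscribe function), but it is not Svelte’s implementation; real code should import from svelte/store.

```javascript
// Hand-rolled sketch of a writable store. Not Svelte's implementation.
function writable(initial) {
  let value = initial;
  const subscribers = new Set();

  function set(next) {
    if (next === value) return; // skip no-op updates
    value = next;
    subscribers.forEach((run) => run(value));
  }

  return {
    subscribe(run) {
      subscribers.add(run);
      run(value); // deliver the current value straight away
      return () => subscribers.delete(run);
    },
    set,
    update(fn) {
      set(fn(value));
    },
  };
}

const count = writable(0);
const log = [];
const stop = count.subscribe((v) => log.push(v));
count.set(1);
count.update((n) => n + 1);
stop();
count.set(99); // not observed after unsubscribing
console.log(log);
```

Any object with a compatible subscribe method can play the same role, which is why other observable libraries are straightforward to plug in.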

See the tutorial and docs for details.

Component Events

Svelte Component events provide a form of pubsub reactivity between child components and their parents. Like the DOM custom event object, which they use, they can be simple triggers or also pass data values (aka details). Unlike DOM events they do not bubble up through the DOM hierarchy. Rather, they directly call the component’s event handler for the event.

Events are created using the createEventDispatcher function. They are handled using the Svelte DOM event handler syntax on:event-name={handler}. A custom event name is supplied rather than a DOM event name. The handler will receive the event including any extended details provided by the dispatcher. A form of the event handler syntax is used to pass the event on to the parent without any processing.

# File Inner.svelte

<script>

import { createEventDispatcher } from 'svelte';

const dispatch = createEventDispatcher();

function sayHello() {

dispatch('message', {

text: 'Hello!'

});

}

</script>

<button on:click={sayHello}>

Click to say hello

</button>

# File App.svelte

<script>

function handleMessage(event) {

alert(event.detail.text);

}

</script>

<Inner on:message={handleMessage}/>

Events are synchronous. The component event handlers are called directly when the message is dispatched.
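The synchronous, direct-call behaviour can be illustrated with a small plain JavaScript sketch. `createDispatcher` is a hypothetical stand-in that only mimics the idea; it is not Svelte’s createEventDispatcher.

```javascript
// Sketch of direct, synchronous dispatch. `createDispatcher` is hypothetical.
function createDispatcher(handlers) {
  return function dispatch(type, detail) {
    const handler = handlers[type];
    if (handler) {
      handler({ type, detail }); // called directly, no bubbling or queueing
    }
  };
}

const order = [];
const dispatch = createDispatcher({
  message: (event) => order.push(`handled ${event.detail.text}`),
});

order.push('before dispatch');
dispatch('message', { text: 'Hello!' });
order.push('after dispatch');
console.log(order);
```

The handler’s entry lands between the other two, showing that dispatch returns only after the handler has run.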

Component Events are useful when you want to use a decoupled event or message pattern, including to pass up the component tree.

See the tutorial and docs for details.

Further Info

11 Sep 2020

If you have a G Suite instance then sending emails from a backend using Gmail turns out to be really simple. Using the Gmail API rather than SMTP and enabling G Suite global delegation means you can simply send email as a G Suite user. Here's how I did it with Netlify Functions.

Email options for backend

In my previous post on the Tools with a Mission (TWAM) app I mentioned the need to send email notifications of various events. TWAM is a non profit user of Google’s and all users requiring notifications have appropriate G Suite Gmail addresses. The app is using Google Sheets as the backend database and it makes sense to also use Gmail to send emails, rather than using yet another service.

It might seem obvious to just use the same SMTP API that email clients use to send and receive via gmail. However, a little research indicates this approach has issues, largely as Google are aggressive in disabling accounts which seem to be suspect, with little recourse to restoration. We obviously can’t risk that for a mission critical app.

As the TWAM app has a backend of Netlify Functions (which simplify AWS Lambda) running on nodejs, another sensible choice is using nodemailer which has a facade for Gmail. Again however, the documentation indicates problems with Gmail and it’s a heck of a lot of abstraction on top of the Gmail APIs, with support for many other transports.

Thus direct Gmail use is my preferred solution, assuming it can be made to work with a reasonable “pain threshold”. As usual with Google APIs, the documentation is poor, confusing and often downright misleading due to multiple versions being around on the interwebs. Worse, when trying to find solutions on Stack Overflow etc, well, “there be Dragons” as they used to say on old maps for unknown dangers.

But in the end after many, many, failed and very frustrating attempts the solution using the Gmail REST API directly is pleasantly simple. You just need to get all the parts lined up “just so”. Google provide a JavaScript API for Node, but that is a monster monolith covering ALL Google’s APIs. I don’t expect so much deployed code will run up big costs on Netlify Serverless Functions, but the thought of using such a “Swiss Army Knife” binding leaves a nasty taste in my mouth. REST will do just fine, thank you; after all, it’s the way of the web.

Code

Reading up on the subject of using the Gmail API throws up several complexities that it turns out are just not required:

No need to get the user to authorise using an OAuth/OpenID flow

This is just as well because we want to send emails as the app backend, not a specific user who is logged into the app. To do so, we can set up a Google Service. This actually uses OAuth JWTs “under the bonnet” but it’s much simpler for us to use than the usual flows. The service uses an email address for identification and a private key for security. We can use the individual google-auth-library package that is part of the Google JavaScript APIs. The final piece of the puzzle is to use a G Suite ‘Global Delegation’ setting to allow the Service to send emails as a user. This way, the app calls the REST API and Gmail sends the email as the specified Gmail user. To set this up, see the Google documentation on domain-wide delegation and ensure the correct scopes are enabled for sending emails (the used value of ‘https://mail.google.com/’ seems too coarse and can probably be refined).

No need to BASE64 encode content

While nearly all examples show the HTTP message body for the email being BASE64 encoded this appears unnecessary. You only need to specify the correct Content-Type header. Anyway, BASE64 encoding is certainly not part of the RFC 822 email specification. Perhaps we’ll hit some content that requires it but for now I’m only sending single part text content (and unicode works just fine).

No need to use node-fetch or Axios

google-auth-library includes a dependency on Gaxios, an Axios-like HTTP client that works just fine for our purposes. It’s called via a wrapper that retries under some auth error circumstances.

The email functions

sendRawEmail calls the Gmail REST endpoint with appropriate security provided via Netlify variables in deployment or a .env shell environment configuration file during development.

sendEmail builds up an RFC 822 message body for common email fields and calls sendRawEmail.

// file - functions/_gmail.js

if (!process.env.NETLIFY) {

// use .env file for local dev and assume netlify variables in CI

require('dotenv').config()

}

if (!process.env.GOOGLE_SERVICE_ACCOUNT_EMAIL)

throw new Error('no GOOGLE_SERVICE_ACCOUNT_EMAIL env var set')

if (!process.env.GOOGLE_PRIVATE_KEY)

throw new Error('no GOOGLE_PRIVATE_KEY env var set')

if (!process.env.GMAIL_SENDING_USER)

throw new Error('no GMAIL_SENDING_USER env var set')

const { JWT } = require('google-auth-library')

// For this to work you must create a service and enable Domain wide delegation for the service

// https://developers.google.com/admin-sdk/directory/v1/guides/delegation

// Set env vars for the service key in GOOGLE_SERVICE_ACCOUNT_EMAIL & GOOGLE_PRIVATE_KEY

// GMAIL_SENDING_USER is the email address that the service delegates for

// ensure you set the used scopes here when enabling the global delegation

async function initServiceClient() {

return new JWT({

email: process.env.GOOGLE_SERVICE_ACCOUNT_EMAIL,

key: process.env.GOOGLE_PRIVATE_KEY.replace(/\\n/g, '\n'),

scopes: ['https://mail.google.com/'], // better to pick narrower scopes

subject: process.env.GMAIL_SENDING_USER,

})

}

// email body is to rfc822. (From: is ignored and GMAIL_SENDING_USER used ) eg

// To:

// Subject: An RFC 822 formatted message

//

// This is the plain text body of the message. Note the blank line

// between the header information and the body of the message.

exports.sendRawEmail = async function (emailBody) {

const client = await initServiceClient()

// see https://github.com/googleapis/gaxios

const options = {

method: 'POST',

url: `https://gmail.googleapis.com/upload/gmail/v1/users/me/messages/send`,

headers: {

'Content-Type': 'message/rfc822',

},

body: emailBody,

}

return await client.request(options)

}

const field = (f, v) => (v ? f + ': ' + v + '\r\n' : '')

exports.sendEmail = async function ({

to = undefined,

cc = undefined,

bcc = undefined,

subject = '',

message = '',

} = {}) {

if (!to) {

throw new Error('"To" field is required')

}

const email = `${field('Subject', subject)}${field('To', to)}${field(

'cc',

cc,

)}${field('bcc', bcc)}\r\n${message}`

return exports.sendRawEmail(email)

}

I also required a simple template for the emails. Something like mustache (or handlebars) is just fine. Lodash provides the necessary.

const template = require('lodash.template')

function parseTemplate(templ, data) {

const options = { interpolate: /{{([\s\S]+?)}}/g } // mustache style

const compiled = template(templ, options)

return compiled(data)

}

const notification = {

to: recipient.email,

subject: parseTemplate(email.subject, templData),

message: parseTemplate(email.message, templData),

}

sendEmail(notification)
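If pulling in Lodash is not wanted, the same mustache-style substitution can be approximated in a few lines. This is a swapped-in, dependency-free sketch rather than the approach used above: unlike lodash.template it only looks up plain keys and does not evaluate expressions. The name `renderTemplate` and the sample data are hypothetical.

```javascript
// Dependency-free sketch of mustache-style interpolation.
// Only plain key lookups are supported; missing keys render as ''.
function renderTemplate(templ, data) {
  return templ.replace(/\{\{([\s\S]+?)\}\}/g, (match, key) => {
    const value = data[key.trim()];
    return value === undefined ? '' : String(value);
  });
}

const subject = renderTemplate('Application {{ id }} for {{country}}', {
  id: 42,
  country: 'Exampleland',
});
console.log(subject); // prints "Application 42 for Exampleland"
```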

25 Aug 2020

A new project with yet a different stack. This time it's a Jamstack static site with a serverless back end using most of Netlify's services. On this platform Eleventy provides templated page build with no front end framework in sight. Google Sheets is used as a database. This post explains some of the technical decisions made and challenges met.

Tools with a Mission (TWAM) recycles and refurbishes tools, sewing machines and computers. It then sends them to the developing world for livelihood creation. Their processes are reasonably complex and currently use a mixture of Google Apps tools, including Gmail and Sheets. I’m working in a small team to help move more of the process to an integrated ‘web app’ experience. This will support customer applications for tools, evaluation, approval and other steps through to shipping in containers.

This is a pretty standard setup of an app with forms plus a back office process which defines the workflow. Thus we looked at the usual candidates for forms and workflow. I personally find such systems to be quite restrictive, especially if they only use a GUI and cannot be data or code driven. Given TWAM’s embedded use of Google Apps we also considered Google Apps Script, which is basically a hosted JavaScript environment with some support for creating web apps and APIs.

In the end, however, my very positive experience with using Netlify Deployments along with Eleventy for https://musicpracticetools.net meant I was keen to try more of the Netlify portfolio of serverless services. For the database, though, rather than the Netlify partner NoSQL database Fauna, I decided to try using Google Sheets. Using Sheets opens up the possibility of easy access to the data and ad-hoc processing using office software skills rather than full developer skills. In addition, Gmail is used for inter-person messaging and for automatic notifications. Again, why code a new messaging solution when a good one exists and fits in with people’s work patterns? So, the stack chosen for the proof of concept is:

- Eleventy with nunjucks templates

- Good ol’ HTML, CSS and JavaScript, i.e. no framework

- Google Sheets API for database

- Gmail API for notifications

- Netlify deploys, forms, functions, identity and role based gating

- Netlify dev - not really part of the stack but excellent

This stack supports a representative slice of functionality including user management, form filling with editing and notifications. The proof of concept flow is that someone makes an application and can check back later to see if it was successful. Someone else reviews a subset of applications filtered by country, adds evaluation notes and assigns an accept/reject status.

Tricky areas

I’d already ironed out any small issues with Eleventy and nunjucks in my last project so just used the same config again. Netlify deploys are really smooth to use with GitHub integration and pretty much just work; as do the forms, which invoke a function when submitted, and the functions, which wrap AWS Lambda, making them a “doddle” to use.

The fun and games came with Google Sheets and Netlify identity used in conjunction with the role based gating.

Google Sheets

Google provide a REST API for Sheets and also the Apps Script environment with support for creating HTTP APIs. They also maintain an npm package binding JavaScript to ALL their APIs, though there is a simpler independent package just for Sheets. We can most easily call the JavaScript binding from our Netlify Functions rather than poking the REST API via fetch. I decided to use the simpler google-spreadsheet package, though it turns out I might switch to the Google monster package as it supports batching API calls for efficiency.

But we soon hit a major issue. For some reason the latest v4 of the Google Sheets API does not support any filtering when fetching sheet cells, and v3 is about to be dropped. That’s obviously a scalability problem! We don’t want to load all columns and rows into memory for processing. I looked at using Apps Script to create an API, which does work, but there are two issues. 1) The script has to be edited in Google’s in-browser editor, which is a terrible experience compared to something like VS Code. 2) Perhaps more seriously, in order to expose the API endpoint, the Google Apps setting that allows anyone to share items publicly must be enabled. That’s a potential security issue and might not be welcome.

After much head scratching I found a workaround. You can use a sheet formula to process the sheet data as required and return the filtered row set. Furthermore, to reduce coupling and the scope for errors, I decided that rather than keeping the formula in the spreadsheet we’d push it from the app and read the results back. In addition, in order to have a chance of handling multiple accesses at once, a new sheet is created for the formula and then deleted. So the process to get a filtered row set is:

- Create a new worksheet in the spreadsheet

- Push the Formula to a cell so it runs

- Fetch the results

- Delete the worksheet
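
The four steps above can be sketched roughly as follows. This is a minimal outline, not the project's actual code: the `sheets` object and its `createSheet`, `writeFormula`, `readValues` and `deleteSheet` methods are a hypothetical adapter you would implement on top of whichever Sheets package you use.

```javascript
// Run a formula in a throwaway worksheet and return whatever it produces.
// `sheets` is a hypothetical adapter; its four async methods are assumptions
// for illustration, not the API of google-spreadsheet or googleapis.
async function fetchFilteredRows(sheets, formula) {
  // A unique title gives each request its own scratch worksheet.
  const title = `query-${Date.now()}-${Math.random().toString(36).slice(2, 8)}`
  const sheetId = await sheets.createSheet(title) // 1. create a new worksheet
  try {
    await sheets.writeFormula(sheetId, 'A1', formula) // 2. push the formula so it runs
    return await sheets.readValues(sheetId) // 3. fetch the results
  } finally {
    await sheets.deleteSheet(sheetId) // 4. always delete, even if a step failed
  }
}
```

The formula itself might be something like `=FILTER(Applications!A:Z, Applications!C:C="Kenya")`, where the sheet name and column are again assumptions. The `try`/`finally` is there so a failed read does not leave scratch worksheets behind.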

This works well but does seem a little “hacky” and I still need to test with multiple simultaneous updates. It is also slow, taking around 4s. This appears to be purely communication time, given the number of round trips: adding a thousand rows to the sheet makes little difference to the timing. The official Google npm package provides a batching mode which should speed things up. You need some good sheet formula skills but can develop in the sheet and move to JavaScript once it all works. In order to further reduce coupling and hardcoded values the formula returns the size of the result set and column names as well as the data itself to the app. The app can read that data first and then use it to fetch the correct cells.
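
As a rough sketch of the "read the size first, then fetch the right cells" idea, assume (hypothetically) that the formula writes the row and column counts into A1:B1, the column names into row 2, and the data from row 3 onwards. The layout and the helper names below are illustrative assumptions, not the project's actual conventions.

```javascript
// Convert a 1-based column number to its A1-notation letters (1 -> A, 27 -> AA).
function columnLetter(n) {
  let letters = ''
  while (n > 0) {
    const rem = (n - 1) % 26
    letters = String.fromCharCode(65 + rem) + letters
    n = Math.floor((n - 1) / 26)
  }
  return letters
}

// Build the A1 range holding the data rows, given the counts read from the sheet.
function dataRange(rowCount, colCount, firstDataRow = 3) {
  return `A${firstDataRow}:${columnLetter(colCount)}${firstDataRow + rowCount - 1}`
}

// Pair each row's cells with the column names returned by the formula.
function rowsToObjects(columnNames, rows) {
  return rows.map((row) =>
    Object.fromEntries(columnNames.map((name, i) => [name, row[i]])),
  )
}
```

With this layout the app never hardcodes how wide or long the result set is; it only needs to know where the metadata lives.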

Netlify Identity and Role Based Gating

Identity along with account management is always a complex topic. Fortunately, Netlify provide a register / login widget that is perfectly serviceable, though nowhere near as flexible or polished as the one from Auth0. I found I could improve the UX to my liking with a little script, though have not yet succeeded with the CSS tweaks I want. Identity is based on stateless JWT tokens as you’d pretty much expect these days. These are flexible and you can pass the user’s id_token to your Functions to provide access control and user info. This way, Netlify manages the users and their details are passed around the front and back ends in the tokens.
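
A minimal sketch of access control inside a Function might look like this. It assumes the front end sends the user's token in an `Authorization: Bearer` header, that Netlify exposes the decoded claims as `context.clientContext.user`, and that roles sit in `app_metadata.roles`. The `authorize` helper and the `reviewer` role name are hypothetical.

```javascript
// Roles carried in the decoded Identity token, or [] when there are none.
function rolesOf(user) {
  return (user && user.app_metadata && user.app_metadata.roles) || []
}

// Return an error response if the caller lacks `requiredRole`, else null.
// Hypothetical helper for illustration.
function authorize(context, requiredRole) {
  const user = context.clientContext && context.clientContext.user
  if (!user) return { statusCode: 401, body: 'Not logged in' }
  if (!rolesOf(user).includes(requiredRole)) {
    return { statusCode: 403, body: 'Forbidden' }
  }
  return null
}

// Function entry point: only users with the (assumed) reviewer role get data.
async function handler(event, context) {
  const denied = authorize(context, 'reviewer')
  if (denied) return denied
  const { email } = context.clientContext.user
  return { statusCode: 200, body: JSON.stringify({ email }) }
}
exports.handler = handler
```

Keeping the check in a small pure function makes it easy to reuse across Functions and to test without deploying.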

The feature that I really appreciate is Role Based gating. This means access to specific app page URIs is controlled declaratively right on the CDN edge, almost without any code required. When a user registers and logs in, a Function is called and this can return the user’s roles. Then a config file is used to define which roles are required per URI. And it just works.
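
As an illustration of the role-returning Function, here is a sketch of an Identity event handler. It assumes a Function named `identity-login` is triggered on login with the user in the request body, and that returning `app_metadata` in a 200 response updates the user's roles. The `rolesFor` rule and the `twam.example` domain are invented for the example.

```javascript
// Hypothetical rule: staff addresses also get the reviewer role.
function rolesFor(email) {
  return email.endsWith('@twam.example')
    ? ['applicant', 'reviewer']
    : ['applicant']
}

// Assumed to live in functions/identity-login.js and run on each login.
async function handler(event) {
  const { user } = JSON.parse(event.body)
  return {
    statusCode: 200,
    body: JSON.stringify({ app_metadata: { roles: rolesFor(user.email) } }),
  }
}
exports.handler = handler

// The matching gate is declared in config rather than code, for example a
// _redirects rule along these lines (the path and role are assumptions):
//   /review/*  200!  Role=reviewer
```

The Function decides who has which role, and the config file decides which role each URI needs, so neither piece has to know about the other.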

Well, almost. I hit a lot of strange behaviours and errors with access, including an apparent failure when logging in on another browser when still logged in. It turned out to be down to my limited cognitive model of how the pieces work together, not helped by very limited documentation. The gating and identity work differently but do get synchronised if you are careful. This is where Netlify’s Community forum came to the rescue in the form of one Jon Sullivan. As a volunteer Jon is incredibly knowledgeable and definitely goes the extra mile, and then some. After a long and fascinating discussion covering how authentication and gating interact and how to handle refresh tokens I now have code which is reliable. It just took a very long time to get there. But we did get a 100+ post thread going, FTW.

Summary

An important point I should make clear is that ALL this functionality from Netlify is available on the free plan, though Forms do have their own pricing model after 100 submissions. That’s very impressive indeed and perfect for a charity with very little cash to spare.

The stack threw me a few big challenges but now it is working well and I think will scale well to the final app.

The code is available at https://github.com/OpenDirective/TWAM-App