Text line endings on Windows: Still painful after all these years

Once upon a time, in the days of Microsoft MS-DOS development, one of the main pain points and sources of bugs was the distinction between text and binary files. When you opened a file you had to say whether it was to be accessed in binary or text mode. In text mode the file content was translated whenever you read or wrote a string of text, meaning you had to know the file contents and use the correct mode. The translation mapped 2 specific characters in the file to 1 specific character in memory. Fortunately this translate-on-read-or-write issue has mostly disappeared now that those 2 characters are simply stored in files by Windows. But the legacy of those 2 pesky characters still causes pain whenever developers share files across multiple platforms such as Windows, Linux and OS X.

A legacy from MS-DOS days lurks in Windows

Shake your carriage

The characters in question are used to mark the end of each line of text (except if automatic text wrapping occurs). You don’t see them but they’re lurking there waiting to catch you out, especially when sharing files between OSs or when using version control.

These 2 characters are technically the ASCII control characters Carriage Return (CR) and Line Feed (LF), also known as Newline. Note that control characters are a special group that, rather than being printed, invoke some sort of action. They hark back to the days of Teletype printers, where CR would make the print head scoot back to the start of the line (the carriage being the mechanism carrying the print head) and LF would move the print head down a line without affecting the horizontal position. Thus, whenever a new line needed to be started, a CR+LF pair would be sent to the Teletype.

These characters are represented in various ways in text files and programs, in ASCII or Unicode:

- CR

  - 0x0D hex

  - “\r” in strings

  - Ctrl M or ^M

- LF

  - 0x0A hex

  - “\n” in strings

  - Ctrl J or ^J
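To see that these notations all name the same two values, here is a minimal Python sketch. The helper name `ctrl` is purely illustrative, and the masking rule it uses is the conventional terminal behaviour for Ctrl key combinations.

```python
# The two control characters, written as string escapes.
CR = "\r"
LF = "\n"

# Their ASCII code points in hex.
print(hex(ord(CR)))  # 0xd
print(hex(ord(LF)))  # 0xa


def ctrl(letter: str) -> str:
    """Return the control character produced by Ctrl+<letter>.

    Conventionally the Ctrl key keeps only the low 5 bits of the
    letter's code, which is why CR is shown as ^M and LF as ^J.
    """
    return chr(ord(letter) & 0x1F)


print(ctrl("M") == CR)  # True
print(ctrl("J") == LF)  # True
```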

We’ve kept this ancient legacy so that the end of every text line (newline) is marked by these characters. Actually, that’s not exactly true. Rather, each OS uses a different set of characters, and that is the root cause of the problem:

- Linux uses LF only

- Windows sticks with CRLF

- Mac OS used CR only up to version 9, but OS X uses LF

As a quick aside, you can discover a file’s line endings by using the “file” command that comes with Linux tools for Windows like “Cygwin” or “Git for Windows”. If any line endings are not LF it will tell you. You can also use editors like the venerable Notepad++, which also lets you change the line ending format.
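If neither tool is to hand, a few lines of Python can do a similar job. This is only a sketch, not a reimplementation of “file”; the function name `count_line_endings` is made up for illustration. It works on raw bytes so that nothing is translated along the way.

```python
def count_line_endings(data: bytes) -> dict:
    """Count CRLF pairs, lone LFs and lone CRs in raw file content."""
    crlf = data.count(b"\r\n")
    # Every CRLF pair contains one LF and one CR, so subtract the
    # pairs to leave only the stand-alone characters.
    lone_lf = data.count(b"\n") - crlf
    lone_cr = data.count(b"\r") - crlf
    return {"CRLF": crlf, "LF": lone_lf, "CR": lone_cr}


def count_file_line_endings(path: str) -> dict:
    """Read a file in binary mode and count its line endings."""
    with open(path, "rb") as handle:
        return count_line_endings(handle.read())
```

A file with more than one non-zero count has mixed line endings.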

Return to the future

Life gets complicated when you need to share text files between these OSs, either directly (eg via network access) or by copying files, perhaps via version control tools. You can try to perform translation to the native format whenever you copy, or have tools that support either end of line. The danger with the latter approach is not processing all text files or ending up with files with mixed line endings. Mixed line endings will confuse tools that often only check the start of files to determine line ending format. In either case, you’re likely to get strange effects in editors such as joined lines or funny characters (eg ^M).

This problem surfaces quite often now with open source development, where contributors can be using any tools on any OS. In addition to sharing files via version control, developers sometimes share files between a VM and the host OS without checking out to each.

So perhaps the best approach is to standardise on a single format for all your files, namely LF. Fortunately these days most Windows programs that developers use support the LF only style, whether they are Windows native or ports of Linux tools. The notable exception is dear old Notepad, which still insists on a CRLF pair to end each line (no doubt as it’s just a “souped up” edit control and Windows uses CRLF natively).
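Converting an existing text file to LF is simple enough to sketch in Python. The function names `to_lf` and `convert_file_to_lf` are hypothetical, and the sketch assumes you only point it at files you know to be text. Note the binary mode on both read and write: it is the same text versus binary distinction from the MS-DOS days, and it stops Python doing any newline translation of its own.

```python
def to_lf(data: bytes) -> bytes:
    """Convert CRLF pairs and lone CRs to LF."""
    # Replace the pairs first so a CRLF does not become two LFs.
    return data.replace(b"\r\n", b"\n").replace(b"\r", b"\n")


def convert_file_to_lf(path: str) -> bool:
    """Rewrite a text file with LF endings; return True if it changed."""
    with open(path, "rb") as handle:
        original = handle.read()
    converted = to_lf(original)
    if converted == original:
        return False
    with open(path, "wb") as handle:
        handle.write(converted)
    return True
```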

There are of course still issues and the ubiquitous git version control is one culprit you are almost certain to stumble across.

Make sure you git the right newlines

By default git assumes that your workspace files will use the OS native newline format for all text files. It will also try to auto detect text files. Internally however, git uses LF only (usually) and translates on Windows during checkin and checkout. This is configured by the “core.eol” and “core.autocrlf” settings which default to “CRLF” and “true” on Git for Windows. These are hardcoded and not set in any of the usual git config files.

On the face of it this is good as you get OS specific end of lines on each platform, but only if you always check out to the operating system you are working on. However, as noted above, developers often share files across OSs so unless they standardise on a single format they’re likely to hit problems.

If you want to use LF universally for your project you need to configure git appropriately. These days that is pretty easy using gitattributes, usually in a .gitattributes file at the root of your project working tree. This overrides any --global, --system or local config settings, thereby ensuring a consistent experience in the project. You might possibly need to specify --local config settings as well, as some .gitattributes options fall back to those.

The catch, just as in those MS-DOS days, is that you must not translate anything if the file is not pure text but is some other “binary” format, eg non XML based word processor files. If you translate these files you corrupt them, “simples”. Accordingly, git tries to auto detect text files but you can also explicitly declare which files are to be treated as either text or binary.
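Auto detection of this kind usually comes down to sniffing the content. The sketch below is a simplified stand-in and not git’s actual algorithm: it treats content as binary if a NUL byte appears near the start. The name `looks_binary` and the 8000 byte sample size are assumptions chosen for illustration.

```python
def looks_binary(data: bytes, sample_size: int = 8000) -> bool:
    """Guess whether content is binary by looking for a NUL byte.

    Plain text almost never contains NUL, whereas most binary
    formats do within their first few thousand bytes. This is a
    heuristic and can guess wrong in both directions.
    """
    return b"\x00" in data[:sample_size]
```

Because any such heuristic can misfire, explicitly declaring which files are text or binary remains the safer choice.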

Gitting practical

This leads to 2 approaches to using LF everywhere:

- Tell git to never translate anything

- Tell git to always convert to LF in your workspace

Never translate

The first option seems safe but you’ll have to ensure all text files you [potentially] wish to share only ever contain LFs. That means making sure editors and other tools never use a CRLF when creating the file or editing lines. Not easy when CRLF is still the native Windows line ending.

Enter EditorConfig to the rescue! This is a standard configuration file, supported by many editors, that specifies format options including line endings. Thus, developers get a consistent editing experience and files are created the same way whatever editor or IDE they use. Some editors support EditorConfig directly and others have plugins. For example, the Visual Studio extension supports most options including line endings, but currently the Visual Studio Code extension only supports indent style so is no use here.

The way to stop git translating anything is to use a .gitattributes entry of “* -text”. This simply says nothing should be treated as text. You can always override for specific filename patterns, for example “*.txt eol=lf”.

The other thing you can do is to ensure your development workflow includes a check for CRLF line endings. For example, you can check all files, including binary, using something like “grep -Url $'\x0D' *” in “Git for Windows”. This will return 0 if there are any matches, 1 otherwise.
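If you would rather not depend on grep being available, the same check can be sketched in Python. The function name `files_with_cr` is hypothetical. Like the grep command, it looks for a CR byte in every file, binary files included, though it skips the .git directory.

```python
import os


def files_with_cr(root: str) -> list:
    """Return the sorted paths of all files under root containing a CR byte."""
    hits = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune git's own metadata directory from the walk.
        dirnames[:] = [name for name in dirnames if name != ".git"]
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Binary mode so no newline translation hides the CRs.
            with open(path, "rb") as handle:
                if b"\r" in handle.read():
                    hits.append(path)
    return sorted(hits)
```

A build script could call `files_with_cr(".")` and fail the build when the list is not empty. Note that this is the opposite sense to grep’s exit status, where 0 means a match was found.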

Always LF

Alternatively, you may want to use the second option of having git translate line endings to LF in your workspace. But bear in mind that files in your workspace are only rewritten on checkout. Thus any CRLFs will remain in your workspace until you go through a complete checkin/checkout cycle. Once again you’ll probably want to use EditorConfig to specify LF end of lines for all new writes.

To get CRLFs translated you’ll need to force git to checkout your files over the existing copies, as by default it doesn’t want to. Otherwise you can leave your workspace in a strange intermediate state that is different from what anyone will experience when they clone or checkout the code. This could potentially be a source of hard-to-track bugs (though most unlikely). If you use Continuous Integration in your workflow then any potential problems will be quickly found.

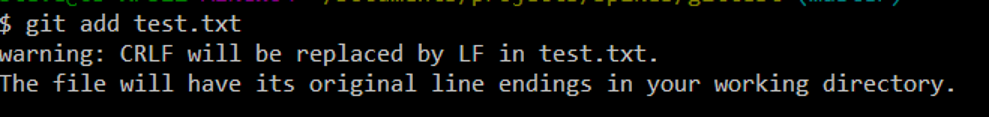

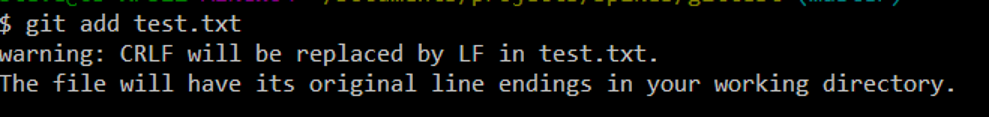

To be fair, git gives a loud warning when you are in a state where a checkout will change the line endings. However, that warning is slightly confusing.

Git warning when line endings are not yet translated.

Git and editors may also complain about the mixed line endings issue described above.

To configure git for this option use “* eol=lf” in .gitattributes. As this will force all files to be treated as text, and so converted on checkin, make sure you explicitly mark any binary files with lines like “*.png binary”. If you don’t then your checked-in file may be corrupt, and you may not notice for some time and be stuck with a hard-to-fix problem.
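As a rough illustration, the Python sketch below assembles such a file from a list of binary filename patterns. The function names and the example patterns are assumptions, not a recommended list. The catch-all line goes first because in .gitattributes a later matching line overrides an earlier one.

```python
def build_gitattributes(binary_patterns) -> str:
    """Build .gitattributes content: LF everywhere, except binary files."""
    # Catch-all rule first; the more specific binary rules follow
    # so that they take precedence for the files they match.
    lines = ["* eol=lf"]
    lines += [f"{pattern} binary" for pattern in binary_patterns]
    return "\n".join(lines) + "\n"


def write_gitattributes(path: str, binary_patterns) -> None:
    """Write the file, keeping LF endings even when run on Windows."""
    # newline="\n" stops Python's text mode from turning each "\n"
    # into CRLF on Windows.
    with open(path, "w", newline="\n", encoding="utf-8") as handle:
        handle.write(build_gitattributes(binary_patterns))
```

For example, `build_gitattributes(["*.png", "*.jpg"])` produces the catch-all line followed by one “binary” line per pattern.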

Note when you first set this option you’ll probably get a load of warnings and all files will appear to change. See the notes on .gitattributes end-of-line conversion for the steps to overcome this.

Coming soon

A gitattributes option to support “* text=auto eol=lf” has been discussed. This would turn on auto text file detection and then use LF end of lines for any text files. Currently the “eol=lf” option turns on text handling for all files and so you need to carefully declare all binary files. That’s good practice anyway, as no doubt git could detect incorrectly, but at least it would not be critical. We should push for this option.

By the way, EditorConfig should soon support an “end_of_line=native” option that will use whatever line ending makes sense according to the OS. That will play better with the default git behaviour but doesn’t help when files are shared without checkout, such as in VMs.